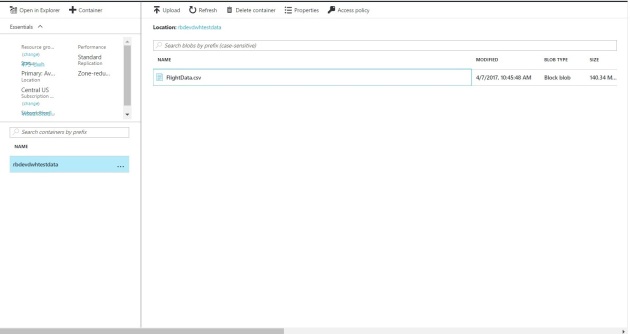

Data: https://rbdevdwhstorage.blob.core.windows.net/rbdevdwhtestdata/FlightData.csv

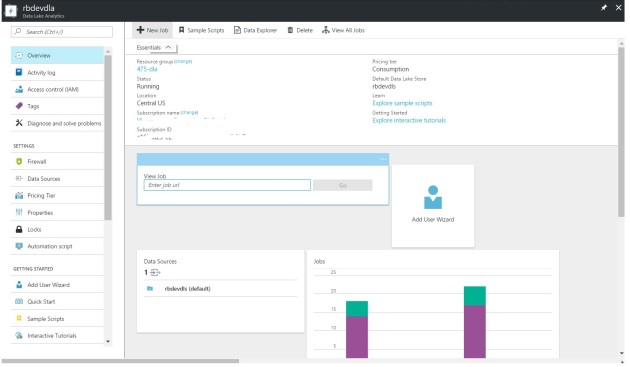

In my third example, I explored SQL Data Warehouse on Azure.

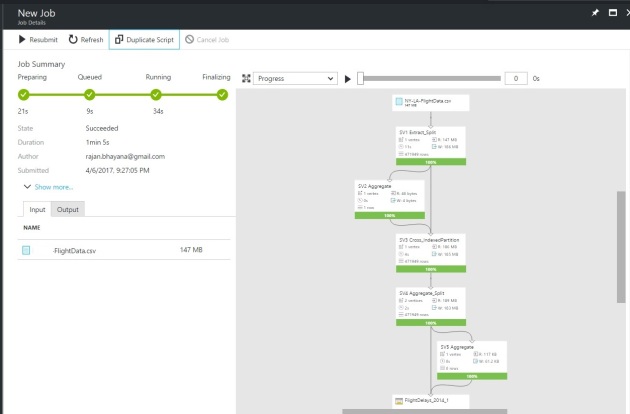

The idea was to take a CSV with half a million rows, process it with SQL Data Warehouse, and see how it performs.

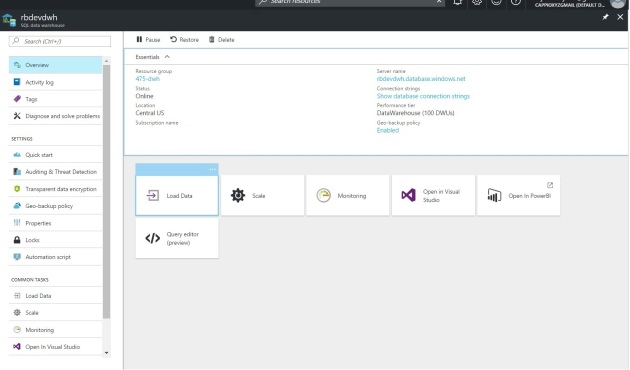

I provisioned one Azure Storage account, where I saved my test data, and one Azure SQL Data Warehouse at 100 DWUs (the smallest size offered for data warehouses).

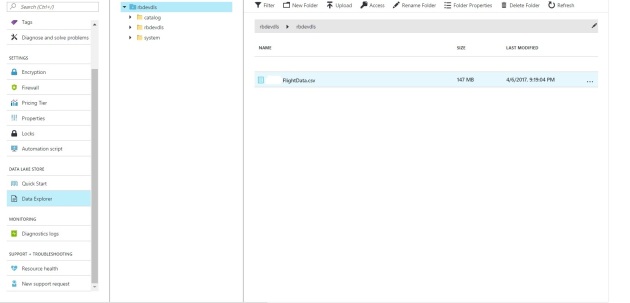

Snapshot of the data stored in Azure Storage:

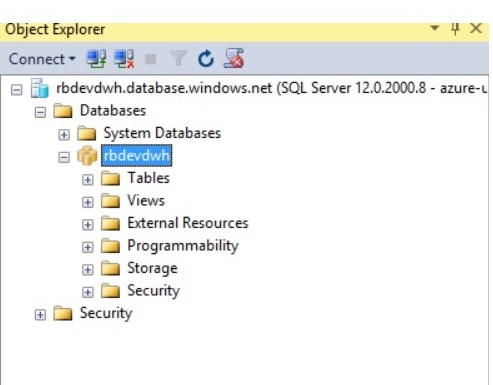

This is the Azure SQL Data Warehouse. Copy the server name from here and open SQL Server Management Studio.

Using SQL Server Management Studio, connect to this data warehouse as you would to any SQL Server database.

Once you are connected, open a new query window.

The first step is to connect securely to the source data, here a CSV with half a million rows.

CREATE MASTER KEY;

CREATE DATABASE SCOPED CREDENTIAL AzureStorageCreds

WITH IDENTITY = '[identityName]'

, SECRET = '[azureStorageAccountKey]'

;

CREATE EXTERNAL DATA SOURCE azure_storage

WITH

(

TYPE = HADOOP

, LOCATION = 'wasbs://[containername]@[accountname].blob.core.windows.net/[path]'

, CREDENTIAL = AzureStorageCreds

);
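As a quick sanity check after this step, the catalog views can be queried to confirm that the credential and the external data source were registered. This is a minimal sketch, assuming the object names used above (`AzureStorageCreds`, `azure_storage`); it only reads metadata and does not touch the storage account.

```sql
-- List database scoped credentials; AzureStorageCreds should appear here.
SELECT name, credential_identity
FROM sys.database_scoped_credentials;

-- List external data sources; azure_storage should point at the wasbs:// location.
SELECT name, location, type_desc
FROM sys.external_data_sources;
```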

Next, define the format of the file we will be reading.

CREATE EXTERNAL FILE FORMAT text_file_format

WITH

(

FORMAT_TYPE = DELIMITEDTEXT

, FORMAT_OPTIONS (

FIELD_TERMINATOR = ',',

STRING_DELIMITER = '"',

USE_TYPE_DEFAULT = TRUE

)

);
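To confirm that the file format was created with the intended delimiters, the `sys.external_file_formats` catalog view can be inspected. A small sketch, assuming the format name `text_file_format` from above:

```sql
-- Show the delimiter settings recorded for the external file format.
SELECT name, format_type, field_terminator, string_delimiter, use_type_default
FROM sys.external_file_formats
WHERE name = 'text_file_format';
```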

The next step is to create an external table, which acts as a kind of view over our source data.

CREATE EXTERNAL TABLE FlightData

(

[Year] varchar(255),

[Quarter] varchar(255),

[Month] varchar(255),

[DayofMonth] varchar(255),

[DayOfWeek] varchar(255),

FlightDate varchar(255),

UniqueCarrier varchar(255),

AirlineID varchar(255),

Carrier varchar(255),

TailNum varchar(255),

FlightNum varchar(255),

OriginAirportID varchar(255),

OriginAirportSeqID varchar(255),

OriginCityMarketID varchar(255),

Origin varchar(255),

OriginCityName varchar(255),

OriginState varchar(255),

OriginStateFips varchar(255),

OriginStateName varchar(255),

OriginWac varchar(255),

DestAirportID varchar(255),

DestAirportSeqID varchar(255),

DestCityMarketID varchar(255),

Dest varchar(255),

DestCityName varchar(255),

DestState varchar(255),

DestStateFips varchar(255),

DestStateName varchar(255),

DestWac varchar(255),

CRSDepTime varchar(128),

DepTime varchar(128),

DepDelay varchar(128),

DepDelayMinutes varchar(128),

DepDel15 varchar(255),

DepartureDelayGroups varchar(255),

DepTimeBlk varchar(255),

TaxiOut varchar(255),

WheelsOff varchar(255),

WheelsOn varchar(255),

TaxiIn varchar(255),

CRSArrTime varchar(128),

ArrTime varchar(128),

ArrDelay varchar(255),

ArrDelayMinutes varchar(255),

ArrDel15 varchar(255),

ArrivalDelayGroups varchar(255),

ArrTimeBlk varchar(255),

Cancelled varchar(255),

CancellationCode varchar(255),

Diverted varchar(255),

CRSElapsedTime varchar(255),

ActualElapsedTime varchar(255),

AirTime varchar(255),

Flights varchar(255),

Distance varchar(255),

DistanceGroup varchar(255),

CarrierDelay varchar(255),

WeatherDelay varchar(255),

NASDelay varchar(255),

SecurityDelay varchar(255),

LateAircraftDelay varchar(255),

FirstDepTime varchar(255),

TotalAddGTime varchar(255),

LongestAddGTime varchar(255),

DivAirportLandings varchar(255),

DivReachedDest varchar(255),

DivActualElapsedTime varchar(255),

DivArrDelay varchar(255),

DivDistance varchar(255),

Div1Airport varchar(255),

Div1AirportID varchar(255),

Div1AirportSeqID varchar(255),

Div1WheelsOn varchar(255),

Div1TotalGTime varchar(255),

Div1LongestGTime varchar(255),

Div1WheelsOff varchar(255),

Div1TailNum varchar(255),

Div2Airport varchar(255),

Div2AirportID varchar(255),

Div2AirportSeqID varchar(255),

Div2WheelsOn varchar(255),

Div2TotalGTime varchar(255),

Div2LongestGTime varchar(255),

Div2WheelsOff varchar(255),

Div2TailNum varchar(255),

Div3Airport varchar(255),

Div3AirportID varchar(255),

Div3AirportSeqID varchar(255),

Div3WheelsOn varchar(255),

Div3TotalGTime varchar(255),

Div3LongestGTime varchar(255),

Div3WheelsOff varchar(255),

Div3TailNum varchar(255),

Div4Airport varchar(255),

Div4AirportID varchar(255),

Div4AirportSeqID varchar(255),

Div4WheelsOn varchar(255),

Div4TotalGTime varchar(255),

Div4LongestGTime varchar(255),

Div4WheelsOff varchar(255),

Div4TailNum varchar(255),

Div5Airport varchar(255),

Div5AirportID varchar(255),

Div5AirportSeqID varchar(255),

Div5WheelsOn varchar(255),

Div5TotalGTime varchar(255),

Div5LongestGTime varchar(255),

Div5WheelsOff varchar(255),

Div5TailNum varchar(255)

)

WITH

(

LOCATION = '/',

DATA_SOURCE = azure_storage,

FILE_FORMAT = text_file_format,

REJECT_TYPE = value,

REJECT_VALUE = 100000

);
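Because the external table only describes the file and does not copy it, a small preview query is a cheap way to check that the columns line up before loading anything. This is a sketch that picks a handful of the columns defined above; the choice of columns is only for illustration. If the CSV has a header row, it will most likely show up as an ordinary data row here, since the file format above does not skip it.

```sql
-- Read a few rows straight from blob storage through the external table.
SELECT TOP 10
    FlightDate,
    Carrier,
    Origin,
    Dest,
    DepDelayMinutes
FROM FlightData;
```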

Once this is done, we run one final command to pull the data into the data warehouse.

CREATE TABLE FlightDataStaging

WITH (DISTRIBUTION = ROUND_ROBIN)

AS

SELECT * FROM FlightData;
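After the load, it can be useful to check how many rows arrived and how they are spread across distributions. The sketch below assumes the staging table was created in the default `dbo` schema; adjust the name if your schema differs.

```sql
-- Count the rows that were loaded into the staging table.
SELECT COUNT(*) AS LoadedRows
FROM FlightDataStaging;

-- Show rows and space used per distribution (dbo schema is an assumption).
DBCC PDW_SHOWSPACEUSED("dbo.FlightDataStaging");
```

With `ROUND_ROBIN` distribution, the row counts per distribution should come out roughly even.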

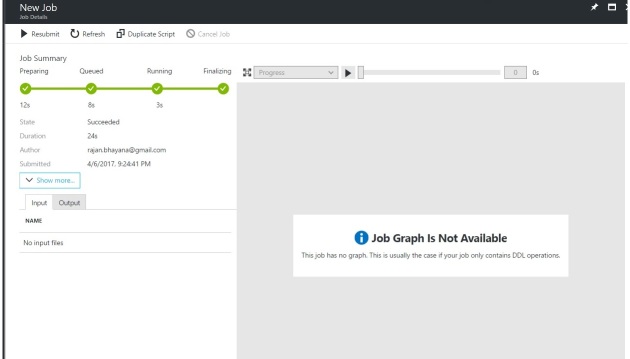

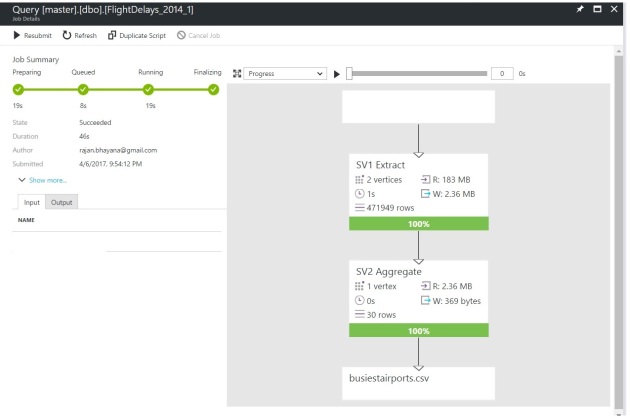

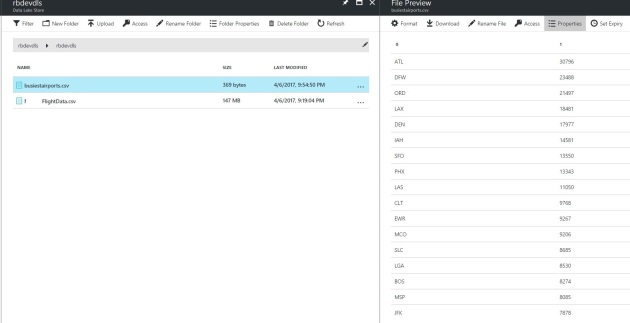

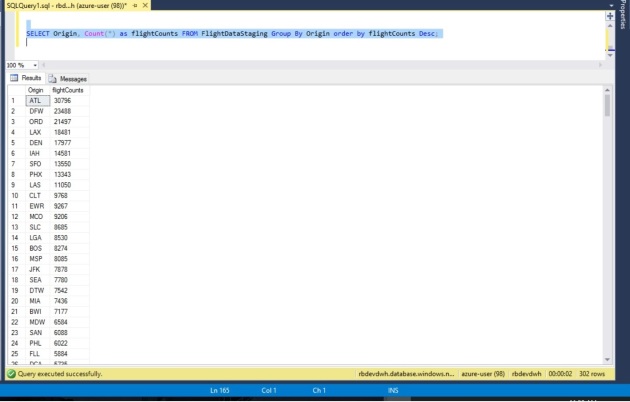

Once this is done, let's run a GROUP BY query over all the data and see how long it takes.

You will notice it took barely 2 seconds.
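The exact query appears only in the screenshots, so the following is just an illustrative sketch of a GROUP BY over the staging table, not necessarily the one that was timed. It counts flights per carrier using columns defined earlier.

```sql
-- Aggregate the full staging table: number of flights per carrier.
SELECT
    Carrier,
    COUNT(*) AS FlightCount
FROM FlightDataStaging
GROUP BY Carrier
ORDER BY FlightCount DESC;
```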

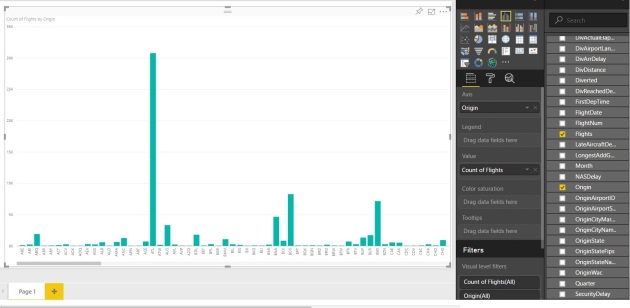

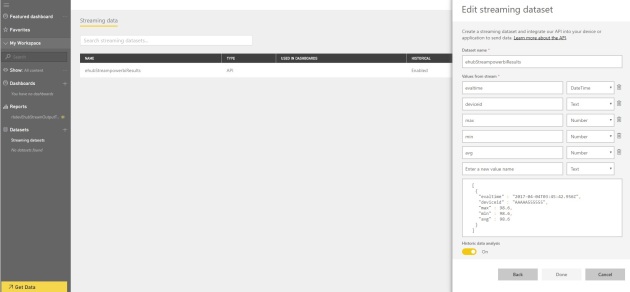

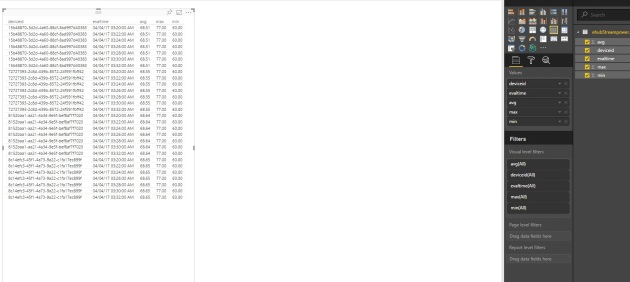

If we want, we can also link our data warehouse to Power BI and display the results.

I tried running a report similar to the one above, and it came up in no time.